Pulling On An Abstracted Cloudy Thread(s)

The Critical Role Of Thread Efficiency In Software Application Design & Engineering

We have moved from dedicated servers, often on premises and later in data centres, first to virtual machines such as VMware, and then to cloud-based infrastructure on platforms like AWS, Azure, DigitalOcean and Google Cloud.

On top of this, we added further abstractions such as Docker and Kubernetes. All of these, whilst no doubt more convenient, have made it increasingly difficult to track and optimize thread behaviour.

In addition, we have seen widespread growth in API usage.

Threads are a fundamental concept in software programming that allow a program to perform multiple tasks concurrently. In Java, which ColdFusion has run on since 2001-2002, thread management is particularly critical. Java runs inside an encapsulated virtual environment, the Java Virtual Machine (JVM), which is what makes “Write Once, Run Anywhere” possible, and the JVM in turn relies on efficiently mapping its threads onto operating system (O/S) threads. Understanding how threads interact with hardware such as CPUs or GPUs therefore involves breaking down the layers of abstraction between software and hardware:

1. Threads in Software

Threads are smaller units of execution within a process. A process can contain multiple threads that share resources such as memory, but each thread executes independently with its own stack. Threads are managed by the operating system (O/S) and by the software application itself. If threads are not released efficiently, they accumulate, and because each one reserves its own stack memory, this can often lead to out-of-memory problems.
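To make the shared-memory and thread-release points concrete, here is a minimal Java sketch, not a prescribed pattern. The class name `BoundedPoolDemo`, the pool size and the task count are hypothetical illustration values. A fixed-size pool runs tasks that all update one shared counter on the heap, and the pool is shut down in a `finally` block so its worker threads are released rather than left lingering.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicLong;

public class BoundedPoolDemo {
    // Sums 1..tasks using a fixed pool of worker threads that share one counter.
    public static long sumInPool(int poolSize, int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        AtomicLong total = new AtomicLong(); // one heap object, visible to every worker thread
        try {
            List<Future<Long>> futures = new ArrayList<>();
            for (int i = 1; i <= tasks; i++) {
                final long value = i; // captured separately by each task
                Callable<Long> task = () -> total.addAndGet(value);
                futures.add(pool.submit(task));
            }
            for (Future<Long> f : futures) {
                f.get(); // block until each task has finished
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // lets the pool's threads exit instead of lingering
        }
        return total.get();
    }

    public static void main(String[] args) {
        System.out.println(sumInPool(4, 100)); // prints 5050
    }
}
```

Forgetting the `shutdown()` call is a common way for pool threads to outlive the work they were created for.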

2. Threads and CPUs

When threads are created in software, they are scheduled by the OS to run on a CPU core. Here's the interaction process:

Thread Scheduling

Thread Scheduler: The OS has a thread scheduler that decides which threads run on which CPU cores and when. This scheduling is based on algorithms that optimize for responsiveness, throughput, or power efficiency.

Context Switching: If more threads exist than available CPU cores, the OS uses context switching to alternate between threads, giving the illusion of parallelism on a single core.
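A small Java sketch of oversubscription, assuming a hypothetical class `OversubscriptionDemo` and an arbitrary factor of four threads per CPU: it starts more CPU-bound threads than the JVM reports logical processors, and all of them still complete because the OS time-slices them with context switches. Note that `Runtime.availableProcessors()` reports logical CPUs, and on recent JDKs running in a container it can reflect the container's CPU limit rather than the host's core count.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class OversubscriptionDemo {
    // Starts threadsPerCpu threads for every logical CPU the JVM reports.
    // With more threads than cores, the OS time-slices them via context switches.
    public static int runOversubscribed(int threadsPerCpu) {
        int cpus = Runtime.getRuntime().availableProcessors();
        int count = cpus * threadsPerCpu;
        AtomicInteger finished = new AtomicInteger();
        Thread[] threads = new Thread[count];
        for (int i = 0; i < count; i++) {
            threads[i] = new Thread(() -> {
                long sum = 0;
                for (int j = 0; j < 1_000_000; j++) {
                    sum += j; // CPU-bound busy work
                }
                if (sum > 0) { // use the result so the loop is not dead code
                    finished.incrementAndGet();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return finished.get();
    }

    public static void main(String[] args) {
        int cpus = Runtime.getRuntime().availableProcessors();
        System.out.println(cpus + " logical CPUs, " + runOversubscribed(4) + " threads completed");
    }
}
```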

Execution on CPU Cores

Each CPU core can execute one thread at a time (or more with simultaneous multithreading technologies like Intel's Hyper-Threading, which exposes two logical cores on a single physical core).

The thread's instructions are fetched, decoded, and executed by the CPU's pipeline.

Thread States

Threads can be in states like running, waiting (for I/O or a lock), or ready (queued for CPU time).

These states are managed by the OS; the hardware itself is unaware of them.

As the saying goes, “everything is governed by the lowest common denominator.” This is a good article on network capacity planning, which is key to the next section.

3. Latency in Inter-Thread Communication

Context:

Modern distributed computing frameworks (like Apache Spark or TensorFlow) spread computation across multiple VMs or containers. Threads often communicate over network layers instead of shared memory.

Impact:

Network Latency: Communication between threads running on different physical machines is significantly slower than communication within a single machine.

Serialization Overhead: Data exchanged between threads on different machines must be serialized and deserialized, which adds CPU cost and latency.
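To illustrate what serialization adds, here is a single-process Java sketch (Java 16+ for records), not a network benchmark. Java's built-in object serialization stands in for whatever wire format a real framework uses, and the `Message` record and `SerializationCostDemo` class are hypothetical. Threads sharing memory would simply pass a reference; threads on different machines must do this conversion on both sides.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

public class SerializationCostDemo {
    // Hypothetical message type standing in for data passed between remote workers.
    public record Message(String id, double[] payload) implements Serializable { }

    // Object -> bytes: the work a sender does before data can cross the network.
    public static byte[] serialize(Message message) {
        try (ByteArrayOutputStream bytes = new ByteArrayOutputStream();
             ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(message);
            out.flush(); // push buffered data into the byte array before reading it
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Bytes -> object: the matching work on the receiving side.
    public static Message deserialize(byte[] data) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return (Message) in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Message original = new Message("batch-1", new double[1_000]);
        long start = System.nanoTime();
        byte[] wire = serialize(original);
        Message copy = deserialize(wire);
        long micros = (System.nanoTime() - start) / 1_000;
        System.out.println(wire.length + " bytes, round trip " + micros + " us, id=" + copy.id());
    }
}
```

Real frameworks often use their own, faster formats, but the shape of the cost (encode, transfer, decode) is the same.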

Example Evidence:

In distributed databases or real-time analytics platforms, inter-thread communication across VMs can introduce up to 10x higher latency compared to running on dedicated hardware.

4. Inconsistent Hardware Access

Context:

Cloud providers abstract hardware details to provide standardized environments. This abstraction can obscure the nuances of the underlying hardware. (See the caricature above).

Impact:

CPU Affinity Issues: Threads may not stay bound to the same physical core across scheduling cycles, which can impact cache performance.

Lack of Direct Control: Developers lose fine-grained control over thread placement and hardware features like NUMA (Non-Uniform Memory Access), which can impact multi-threaded application performance.

Example Evidence:

Performance profiling of multi-threaded applications in cloud environments has shown degradation due to cache misses and memory access overhead when threads are moved across cores by the hypervisor.

5. GPU Threading in Virtualized Environments

Context:

GPUs in cloud environments are often shared among multiple users or workloads.

Impact:

Virtualized GPU Scheduling: GPU virtualization technologies such as NVIDIA vGPU or AMD MxGPU share a physical GPU among virtual machines. This adds a scheduling layer that might not be optimized for certain workloads, reducing the GPU’s parallelism advantage.

Overhead from Virtual I/O: GPU memory access is virtualized, which adds latency.

Example Evidence:

Studies on GPU virtualization in clouds report up to 30% performance degradation for GPU-intensive workloads compared to bare-metal execution.

This is a good insight into NVIDIA GPUs and how they operate.

6. Debugging Complexity

Context:

With deep abstraction, tracing performance issues in thread execution becomes more complex.

Impact:

Tools like profilers and debuggers often lack visibility across all abstraction layers, making it harder to pinpoint issues like deadlocks, race conditions, or contention.

Hypervisor-level thread issues might appear as application-level slowdowns, confusing developers.

Example Evidence:

Anecdotal reports from cloud users indicate difficulty in diagnosing thread bottlenecks in large-scale, distributed microservice architectures due to limited visibility into the underlying infrastructure.
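Within a single JVM, at least, the standard management API does give some visibility. The following Java sketch (hypothetical class `DeadlockProbe`) deliberately creates a classic two-lock deadlock and then asks `ThreadMXBean.findMonitorDeadlockedThreads()` which threads are stuck. It only sees monitor deadlocks inside this JVM; it cannot see hypervisor or cross-service contention, which is exactly the visibility gap described above.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

public class DeadlockProbe {
    // Starts two threads that take two locks in opposite order (a classic deadlock),
    // then returns how many of them the JVM reports as monitor-deadlocked.
    public static int createAndDetectDeadlock() {
        Object lockA = new Object();
        Object lockB = new Object();
        CountDownLatch bothHoldFirstLock = new CountDownLatch(2);

        Thread t1 = new Thread(() -> lockInOrder(lockA, lockB, bothHoldFirstLock), "worker-1");
        Thread t2 = new Thread(() -> lockInOrder(lockB, lockA, bothHoldFirstLock), "worker-2");
        t1.setDaemon(true); // daemon threads, so the stuck pair cannot keep the JVM alive
        t2.setDaemon(true);
        t1.start();
        t2.start();

        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        for (int attempt = 0; attempt < 50; attempt++) {
            try {
                Thread.sleep(100);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
            long[] ids = mx.findMonitorDeadlockedThreads(); // null when no monitor deadlock exists
            if (ids == null) {
                continue;
            }
            int found = 0;
            for (ThreadInfo info : mx.getThreadInfo(ids)) {
                boolean ours = info != null
                        && (info.getThreadId() == t1.getId() || info.getThreadId() == t2.getId());
                if (ours) {
                    found++;
                    System.out.println(info.getThreadName() + " waits for " + info.getLockName()
                            + " held by " + info.getLockOwnerName());
                }
            }
            if (found == 2) {
                return found;
            }
        }
        return 0;
    }

    private static void lockInOrder(Object first, Object second, CountDownLatch latch) {
        synchronized (first) {
            latch.countDown();
            try {
                latch.await(); // make sure the other thread holds its first lock too
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            synchronized (second) {
                // never reached: the other thread holds 'second' and waits for 'first'
            }
        }
    }

    public static void main(String[] args) {
        System.out.println("Deadlocked threads: " + createAndDetectDeadlock());
    }
}
```

The same information is what a thread dump (for example from `jstack`) reports as "Found one Java-level deadlock".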

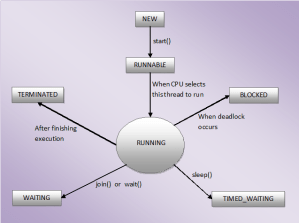

Specific To Java Thread States

In the Java Virtual Machine (JVM), a thread can exist in several states during its lifecycle. These states are defined in the java.lang.Thread.State enum, and they represent the various phases of a thread’s execution. Here’s a detailed explanation of each state:

1. NEW

Description: The thread is created but hasn’t started yet.

When: A thread is in the `NEW` state immediately after it is created with the `Thread` class, but before its `start()` method is called.

Example:

```java
Thread thread = new Thread(); // NEW state
```

2. RUNNABLE

Description: The thread is executing in the JVM, although it may be waiting for operating system resources such as CPU time. In other words, it may be actively running or queued by the scheduler.

When: After the `start()` method is called, the thread transitions to the `RUNNABLE` state. This doesn't mean the thread is actively running; for ordinary (platform) threads, the operating system's scheduler decides when it actually gets CPU time.

Example:

```java
thread.start(); // RUNNABLE state
```

3. BLOCKED

Description: The thread is waiting to acquire a monitor lock (e.g., to enter a `synchronized` block or method).

When: A thread enters the `BLOCKED` state when it attempts to enter a critical section guarded by a monitor that another thread already holds.

Example:

```java
synchronized (lockObject) {
    // While this thread holds lockObject, any other thread trying to
    // enter a block synchronized on lockObject will be BLOCKED.
}
```

4. WAITING

Description: The thread is indefinitely waiting for another thread to perform a specific action (e.g., notify it).

When: A thread enters the `WAITING` state when methods like `Object.wait()`, `Thread.join()` or `LockSupport.park()` are called without a timeout.

How it leaves: It remains in this state until another thread invokes `notify()` or `notifyAll()` on the object it is waiting on, or until the thread it has joined finishes execution.

Example:

```java
synchronized (lockObject) {
    lockObject.wait(); // WAITING state; releases lockObject until notified
}
```

5. TIMED_WAITING

Description: The thread is waiting for a specified amount of time for an action to complete or for another thread’s signal.

When: A thread enters the `TIMED_WAITING` state when time-limited methods like `Thread.sleep()`, `join(timeout)`, or `wait(timeout)` are used.

How it leaves: It moves out of this state when the timeout expires or, where applicable, when it is notified or interrupted.

Example:

```java
Thread.sleep(1000); // TIMED_WAITING state for about 1 second
```

6. TERMINATED

Description: The thread has finished execution.

When: A thread enters the `TERMINATED` state after its `run()` method completes, either normally or by throwing an uncaught exception.

Example:

```java
thread.start();
thread.join(); // waits for run() to finish; the thread is now TERMINATED
```

This is a good article on threads and blocking in Java.

Visual Thread Lifecycle

Here's a simplified view of how threads transition between states:

```text
NEW --> RUNNABLE <--> BLOCKED
        RUNNABLE <--> WAITING
        RUNNABLE <--> TIMED_WAITING
        RUNNABLE  --> TERMINATED
```
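The lifecycle above can be observed directly with `Thread.getState()`. This is a minimal Java 9+ sketch under stated assumptions: the class `ThreadStateDemo` and its helper `awaitState` are hypothetical, each state is demonstrated with a separate short-lived thread, and states are polled because they change asynchronously.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;

public class ThreadStateDemo {
    // Polls until the thread reports the expected state, or about 5 seconds pass.
    static Thread.State awaitState(Thread t, Thread.State expected) {
        long deadline = System.nanoTime() + 5_000_000_000L;
        while (t.getState() != expected && System.nanoTime() < deadline) {
            Thread.onSpinWait();
        }
        return t.getState();
    }

    public static List<Thread.State> observeLifecycle() {
        List<Thread.State> seen = new ArrayList<>();
        Object lock = new Object();

        // NEW, RUNNABLE and (at the end) TERMINATED: a thread that spins until told to stop.
        AtomicBoolean stop = new AtomicBoolean(false);
        Thread spinner = new Thread(() -> {
            while (!stop.get()) {
                Thread.onSpinWait();
            }
        });
        seen.add(spinner.getState());                              // NEW
        spinner.start();
        seen.add(awaitState(spinner, Thread.State.RUNNABLE));      // RUNNABLE

        // BLOCKED: this thread holds the monitor, so the worker cannot enter it.
        Thread blocked = new Thread(() -> {
            synchronized (lock) { /* entered only after the monitor is released */ }
        });
        synchronized (lock) {
            blocked.start();
            seen.add(awaitState(blocked, Thread.State.BLOCKED));   // BLOCKED
        }

        // WAITING: Object.wait() with no timeout, until notified.
        Thread waiting = new Thread(() -> {
            synchronized (lock) {
                try {
                    lock.wait();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }
        });
        waiting.start();
        seen.add(awaitState(waiting, Thread.State.WAITING));       // WAITING
        synchronized (lock) {
            lock.notifyAll();
        }

        // TIMED_WAITING: Thread.sleep() with a long timeout, cut short by interrupt().
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(10_000);
            } catch (InterruptedException e) {
                // woken early by interrupt(); simply let the thread finish
            }
        });
        sleeper.start();
        seen.add(awaitState(sleeper, Thread.State.TIMED_WAITING)); // TIMED_WAITING
        sleeper.interrupt();

        stop.set(true);
        seen.add(awaitState(spinner, Thread.State.TERMINATED));    // TERMINATED
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(observeLifecycle());
    }
}
```

On a normally loaded machine this should print the six states in order: `[NEW, RUNNABLE, BLOCKED, WAITING, TIMED_WAITING, TERMINATED]`.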

Key Points

A thread can frequently transition between `RUNNABLE`, `BLOCKED`, `WAITING`, and `TIMED_WAITING` during its lifecycle.

The actual execution of a thread in the `RUNNABLE` state is determined by the JVM's thread scheduler and the underlying operating system.

API Usage Increase

The use of Application Programming Interfaces (APIs) has surged in recent years, becoming integral to modern software development. While it's challenging to pinpoint the exact number of APIs in existence due to their vast and ever-growing nature, several indicators highlight their widespread adoption:

Public APIs: RapidAPI, a leading API marketplace, hosts over 14,000 public APIs and handles approximately 400 billion API calls per month.

API Traffic Growth: The healthcare sector experienced a remarkable 400% increase in API traffic in 2020, underscoring the critical role of APIs in scaling services during the COVID-19 pandemic.

Developer Engagement: A significant majority of developers are integrating APIs into their workflows:

75% utilize internal APIs.

54% use third-party APIs.

49% engage with partner-facing APIs.

These statistics illustrate the pervasive and growing reliance on APIs across various industries, highlighting their essential role in enabling seamless integration and functionality in today's software applications.

Mitigation Strategies

Despite these challenges, various techniques can help mitigate detrimental impacts on thread management in deeply abstracted environments:

Thread Affinity and Pinning: Where the platform allows it, pin vCPUs to dedicated physical CPU cores, reducing context-switching and core-migration overhead.

Performance Monitoring: Use advanced monitoring tools (e.g., AWS CloudWatch, Google Cloud Trace) to detect resource contention and latency issues.

Optimize Resource Allocation: Right-size VM and container configurations to avoid over-allocation of threads relative to available hardware.

Hybrid Deployments: For latency-sensitive workloads, consider a hybrid model that pairs cloud resources with on-premises bare-metal hardware.

Test - Test - Test: It has become ever more critical to test all code thoroughly before deploying it to production. Our tool of choice is Apache JMeter, which becomes ever more capable as time passes. More details on using JMeter can be found here.
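As a small in-process complement to the platform monitoring and right-sizing points above, this Java sketch (hypothetical class `RightSizedPool`) sizes a CPU-bound pool from `availableProcessors()` and reads JVM-wide thread counts from `ThreadMXBean`. One thread per processor is a common rule of thumb for CPU-bound work only; I/O-bound pools are usually sized differently, so treat the number as a starting point for load testing rather than a rule.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class RightSizedPool {
    // Rule of thumb for CPU-bound work: about one worker per available processor.
    public static int cpuBoundPoolSize() {
        return Math.max(1, Runtime.getRuntime().availableProcessors());
    }

    // Snapshot of JVM-wide thread counts, e.g. to log alongside platform metrics.
    public static String threadSnapshot() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        return "live=" + mx.getThreadCount()
                + " peak=" + mx.getPeakThreadCount()
                + " daemon=" + mx.getDaemonThreadCount();
    }

    public static void main(String[] args) throws InterruptedException {
        int size = cpuBoundPoolSize();
        ExecutorService pool = Executors.newFixedThreadPool(size);
        for (int i = 0; i < size * 10; i++) {
            pool.submit(() -> Math.sqrt(Math.random())); // placeholder CPU work
        }
        System.out.println("pool size=" + size + ", " + threadSnapshot());
        pool.shutdown();
        if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
            pool.shutdownNow(); // give up on stragglers rather than leak threads
        }
    }
}
```

Watching the peak thread count during a JMeter run is one simple way to spot a pool or thread leak before production.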

Conclusion

While the deep abstraction of cloud computing offers immense flexibility, it introduces challenges that can detrimentally affect thread management. These impacts are more pronounced for performance-sensitive, thread-intensive, or real-time applications. Being aware of these trade-offs and adopting best practices can help mitigate the downsides.

In upcoming posts we will dig much deeper into the effects of thread management, as this is a truly fundamental and impactful issue. Thank you for reading, and more soon…

This is another good video on CPUs, caches, etc.: https://www.youtube.com/watch?v=SAk-6gVkio0

This video is well worth watching from a CPU/GPU standpoint: https://www.youtube.com/watch?v=wBqfzj6CEzI